Secondary Categories: 02 - Homelab

Summary

Current Setup

It's been quite some time since I last updated my home lab. Before making any upgrades, my current setup consists of the following:

- DS1821+

- 32 GB RAM

- 10 GbE Fiber with Dual SFP+ ports (One port in use)

- 4x 10 TB in SHR (RAID 5) - 30 TB usable space

In my PC I have:

- 10 GbE Fiber with Dual SFP+ ports (One port in use)

- 96 GB RAM

I was looking into changing the network card and potentially adding faster storage. I do a lot of random reads/writes day to day, but the majority of the changes I make come from a few focused directories containing the files that I edit.

Network Options Considerations

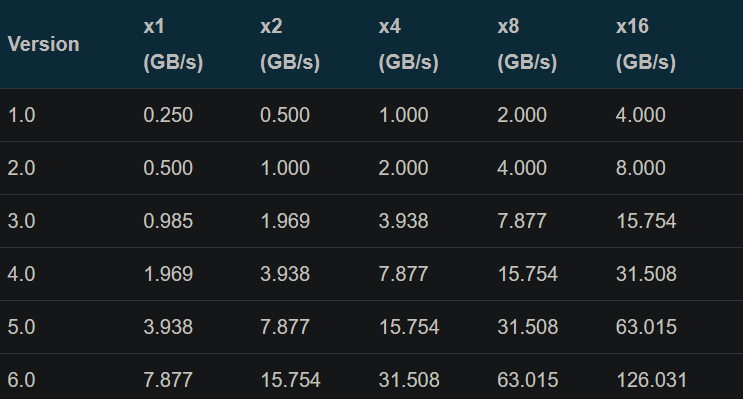

The Synology DS1821+ has a PCIe 3.0 slot that is x8 wide but wired with 4 lanes, which means I have about 3.94 GBps (4 x 0.985 GBps) available for use. Below are the speeds for each PCIe version and number of lanes.

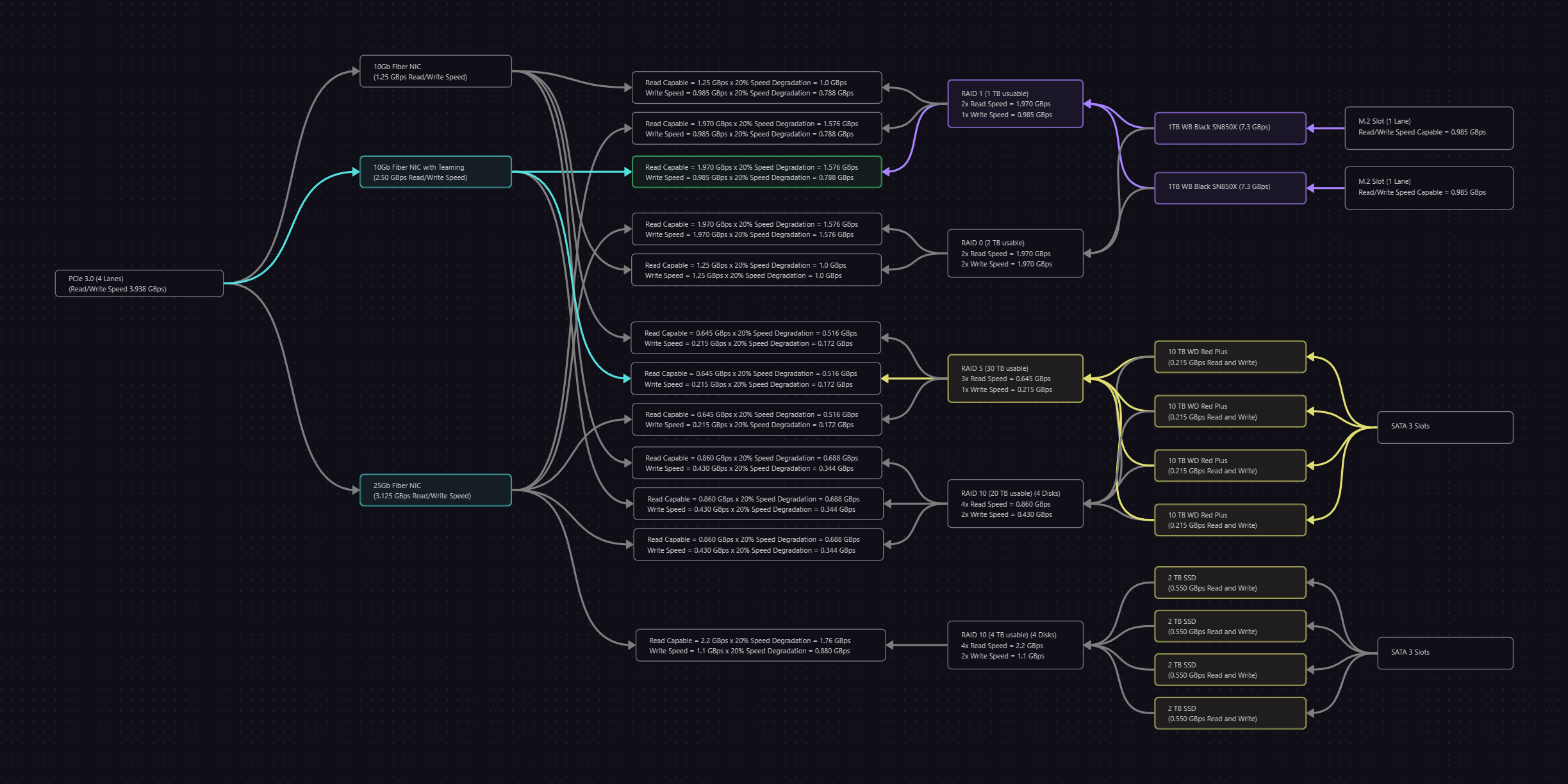
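The per-lane figures in a table like this can be derived from each generation's raw transfer rate and line encoding. The following is a minimal sketch of that arithmetic; the function name `pcie_bandwidth_gbps` and the dictionary layout are illustrative choices, not something taken from Synology's documentation.

```python
# Per-lane PCIe throughput derived from the raw transfer rate and line encoding.
# Each entry is (transfer rate in GT/s, payload bits, total bits on the wire).
PCIE_GENERATIONS = {
    "1.0": (2.5, 8, 10),
    "2.0": (5.0, 8, 10),
    "3.0": (8.0, 128, 130),
    "4.0": (16.0, 128, 130),
    "5.0": (32.0, 128, 130),
}


def pcie_bandwidth_gbps(generation: str, lanes: int) -> float:
    """Return the theoretical one-direction bandwidth in GBps (gigabytes per second)."""
    transfer_rate, payload_bits, total_bits = PCIE_GENERATIONS[generation]
    per_lane = transfer_rate * payload_bits / total_bits / 8  # bits -> bytes
    return per_lane * lanes


if __name__ == "__main__":
    for gen in PCIE_GENERATIONS:
        print(f"PCIe {gen}: x1 = {pcie_bandwidth_gbps(gen, 1):.3f} GBps, "
              f"x4 = {pcie_bandwidth_gbps(gen, 4):.3f} GBps")
```

For PCIe 3.0 this gives roughly 0.985 GBps per lane and about 3.94 GBps for the 4 lanes available in the DS1821+ slot. These are theoretical ceilings, so real transfers will land lower.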

Let's look at the options we have based on the Synology compatibility list. It looks like we can use Remote Direct Memory Access (RDMA) NICs in the Synology, such as a 25GbE NIC plugged into our PCIe slot. A 25Gbps NIC is 3.125 GBps on paper, so realistic speeds land around 2.5 GBps. This fits within the bandwidth of the Synology's PCIe slot. Before we go any further, let's take a look at our current storage speeds:

4x 10 TB WD Red Plus (215 MBps each) in RAID 5

- 3x Read Speed = 0.645 GBps, minus 20% Speed Degradation = 0.516 GBps

- 1x Write Speed = 0.215 GBps, minus 20% Speed Degradation = 0.172 GBps

So whether I write from my PC over 25GbE or 10GbE, I would be limited by the speed of my current storage configuration.
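The bottleneck reasoning above can be sketched as "derate the link, then take the slower of link and storage". This is a rough model under the post's own 20% degradation assumption; `effective_speed` and `gbit_to_gbyte` are hypothetical helper names used only for illustration.

```python
def gbit_to_gbyte(gigabits_per_second: float) -> float:
    """Convert a link rate in Gbps to GBps (8 bits per byte)."""
    return gigabits_per_second / 8


def effective_speed(link_gbit: float, storage_gbyte: float,
                    degradation: float = 0.20) -> float:
    """Return the end-to-end speed in GBps: the slowest stage wins.

    storage_gbyte is expected to already include the degradation.
    """
    link = gbit_to_gbyte(link_gbit) * (1 - degradation)
    return min(link, storage_gbyte)


# Figures from the post: 4x WD Red Plus at 215 MBps each, RAID 5, 20% degradation.
HDD_MBPS = 215
DRIVES = 4
DEGRADATION = 0.20
raid5_read = (DRIVES - 1) * HDD_MBPS / 1000 * (1 - DEGRADATION)  # ~0.516 GBps
raid5_write = 1 * HDD_MBPS / 1000 * (1 - DEGRADATION)            # ~0.172 GBps

if __name__ == "__main__":
    for link in (10, 25):
        print(f"{link}GbE: read {effective_speed(link, raid5_read):.3f} GBps, "
              f"write {effective_speed(link, raid5_write):.3f} GBps")
```

Both the 10GbE and 25GbE rows come out identical, because even a derated 10GbE link (1.0 GBps) is faster than the hard drive array in this model.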

What if we utilized the two empty M.2 slots and used them as storage pools? By default Synology does not support, and probably never will, non-Synology NVMe drives as storage pools on the DS1821+. Their reasoning is that heat from the NVMe drives near the hard drives can cause long-term issues… It's partially a valid concern, but this is also just another money grab for Synology.

We can get around this, though, since there just so happens to be a lovely GitHub repo that allows us to create our own storage pools with the NVMe drives. We can put our NVMe drives in any of the following configurations: SHR, JBOD, RAID 1, RAID 0, etc. I’m only interested in either RAID 0 or RAID 1, so let's compare:

RAID 1:

- 2x Read and 1x Write Speed

- Minimum 2 drives with 1 drive redundancy.

RAID 0:

- 2x Read and Write Speed

- No Redundancy

So two 1 TB drives in RAID 1 will have 1 TB of usable space, while in RAID 0 they would have 2 TB of usable space, but if a drive fails you lose everything.

Both RAID options offer 2x read speed, so if your NVMe has a read speed of 7.3 GBps and it's put in either RAID 1 or RAID 0, you’d get around 14.6 GBps of read. Since NVMe longevity is based on the number of writes we make to the drive, it would be best to have as few writes as possible, and in the event of a failure we want to be able to recover the data. So RAID 1 may be the best choice. There is a catch to these slots, though… Each slot has only 1 lane, which means the read and write speeds max out at 0.985 GBps per slot. So with the RAID 1 configuration this would be 1.970 GBps read and 0.985 GBps write. With 25 GbE we could easily max out these speeds.

Another option would be to keep my current setup and utilize NIC teaming on my dual-port 10Gb fiber connection. In this case the max throughput of 10Gb NIC teaming would give us 20Gb, or 2.5 GBps. This would also max out our NVMe. With a speed degradation of 20%, we can realistically get around 1.576 GBps read and 0.788 GBps write from the NVMe pool with either a single 25Gb NIC or dual 10Gb NICs.
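The lane cap on the M.2 slots can be sketched as follows. This assumes the post's simplified RAID 1 model (reads scale 2x across the mirror, writes stay at 1x) and the 0.985 GBps per-slot limit quoted above. The drive write speed of 6.0 GBps is a placeholder assumption, since only the 7.3 GBps read figure appears in the post.

```python
PCIE_LANE_GBPS = 0.985  # single-lane limit per M.2 slot, as quoted in the post


def nvme_raid1_speeds(drive_read: float, drive_write: float,
                      lane_cap: float = PCIE_LANE_GBPS,
                      degradation: float = 0.20):
    """Estimate two-drive RAID 1 speeds when each slot is capped by its lane.

    Returns (raw, derated), each a (read_gbps, write_gbps) tuple.
    """
    read_per_slot = min(drive_read, lane_cap)    # the slot, not the drive, is the limit
    write_per_slot = min(drive_write, lane_cap)
    raw = (2 * read_per_slot, write_per_slot)    # 2x read, 1x write
    derated = tuple(value * (1 - degradation) for value in raw)
    return raw, derated


if __name__ == "__main__":
    # 7.3 GBps read is from the post; 6.0 GBps write is an assumed placeholder.
    raw, derated = nvme_raid1_speeds(drive_read=7.3, drive_write=6.0)
    print(f"raw:     read {raw[0]:.3f} GBps, write {raw[1]:.3f} GBps")
    print(f"derated: read {derated[0]:.3f} GBps, write {derated[1]:.3f} GBps")
```

Because the slot cap is far below what the drive can do, any NVMe faster than about 1 GBps gives the same result in this model, which is why a cheaper drive would perform identically here.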

Since it would cost about 38 for a new fiber cable and two SFP+ adapters, it's obvious that the best choice is to stick with what I already have.

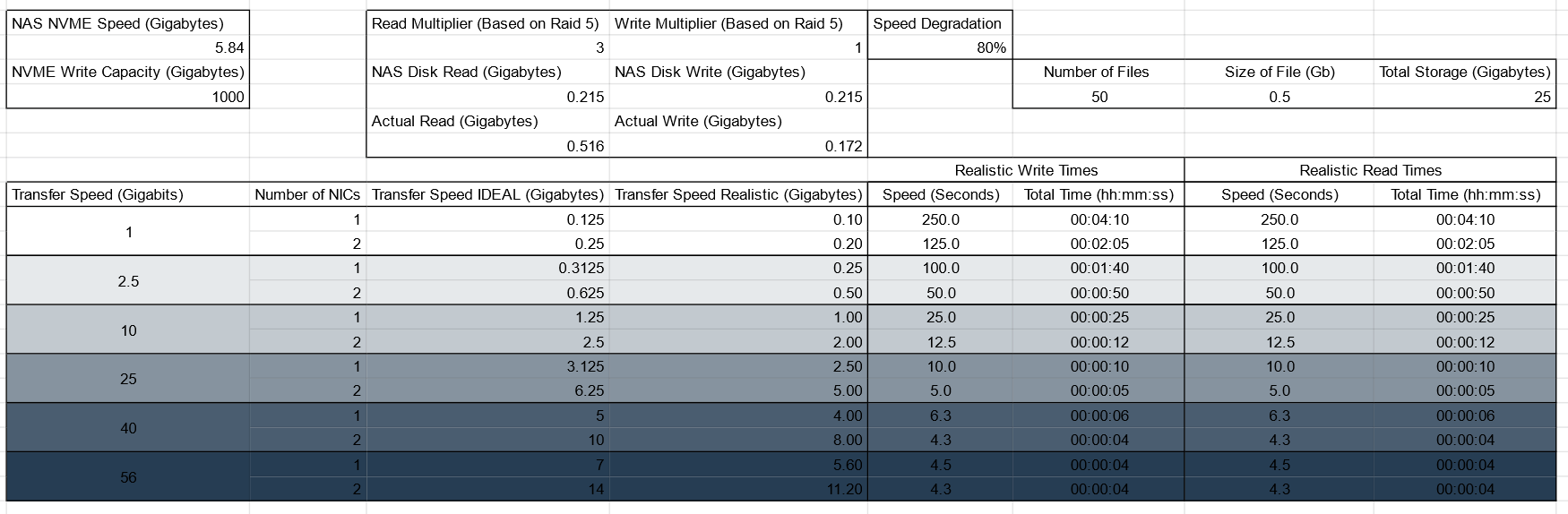

I created a calculator to help estimate and decide the best options for my setup. In this screenshot I assumed the following:

- 20% Read/Write Speed Degradation

- 50 Files @ 0.5 GB (25 GB Total)

- RAID 5 with 4x 10 TB WD Red Plus (215 MBps read/write per drive)

- RAID 1 with 2x 1 TB WD Black NVMe (1 TB usable)

As you can see, with NIC-teamed 10Gb fiber I can write 25 GB of files in around 12 seconds and read them in around 12 seconds as well. Anything above a single 25Gb NIC is not usable since the PCIe slot is limited to 4 lanes.
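A transfer-time estimate like the one in the calculator can be sketched in a few lines. This is not the spreadsheet itself, just a rough reconstruction under the assumptions listed above (20% degradation, 50 files of 0.5 GB). The function `transfer_seconds` and its optional storage cap are illustrative.

```python
from typing import Optional


def transfer_seconds(total_gb: float, link_gbit: float,
                     storage_gbyte: Optional[float] = None,
                     degradation: float = 0.20) -> float:
    """Estimate transfer time in seconds for total_gb gigabytes.

    The link is derated by `degradation`. If storage_gbyte (already derated)
    is given, the slower of link and storage sets the speed.
    """
    link = link_gbit / 8 * (1 - degradation)  # Gbps -> GBps, then derate
    speed = link if storage_gbyte is None else min(link, storage_gbyte)
    return total_gb / speed


if __name__ == "__main__":
    total_gb = 50 * 0.5  # 50 files at 0.5 GB each = 25 GB
    print(f"Teamed 10Gb, link only:    {transfer_seconds(total_gb, 20):.1f} s")
    print(f"Teamed 10Gb, NVMe read:    {transfer_seconds(total_gb, 20, 1.576):.1f} s")
    print(f"Teamed 10Gb, NVMe write:   {transfer_seconds(total_gb, 20, 0.788):.1f} s")
```

The link-only case gives 12.5 seconds, which lines up with the roughly 12 seconds quoted above. If the NVMe RAID 1 estimates from earlier in the post (1.576 GBps read, 0.788 GBps write) are applied as a cap, the same 25 GB comes out closer to 16 seconds for reads and 32 seconds for writes.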

What about the Internet Download and Uploads?

I currently have 1Gb fiber internet at my house, so I get 1000 Mb/sec. With a speed degradation of 20% that comes to 800 Mb/sec, which is 100 MB/sec (about 95 MiB/sec) over a wired connection.

As an example, in the last table we can see that a 25 GB file will take 4 minutes and 10 seconds to download to my PC. I’m technically bottlenecked by my internet speed, but once it's on my system I can use my dual 10Gb network card to transfer it to my NAS in 12 seconds. So the local transfer is 3 minutes 58 seconds faster, or about a 21x speed increase.

You can test your own network using Network Download Test Files and compare it against my calculated table. I tested my network with the 2GB file and it completed in about 20 seconds at 95MB/sec. This closely matches what was estimated in my charts above.
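The internet figures above can be reproduced with a short calculation. This sketch assumes the same 20% degradation and treats the 12-second NAS transfer as a given input from the post. The helper names are illustrative. It also shows why a download tool may report about 95 for a link that works out to 100 MB/sec: many tools count in MiB (1024 x 1024 bytes).

```python
MIB = 1024 ** 2  # bytes in one mebibyte


def usable_rate_bytes(link_mbit: float, degradation: float = 0.20) -> float:
    """Return the usable rate in bytes per second for a link given in Mb/sec."""
    return link_mbit * 1e6 * (1 - degradation) / 8


def format_duration(seconds: float) -> str:
    """Format a duration as 'M min S s', rounded to the nearest second."""
    minutes, secs = divmod(round(seconds), 60)
    return f"{minutes} min {secs} s"


if __name__ == "__main__":
    rate = usable_rate_bytes(1000)  # 1Gb fiber internet
    print(f"{rate / 1e6:.0f} MB/sec = {rate / MIB:.1f} MiB/sec")

    download = 25e9 / rate  # 25 GB file over the internet link
    lan = 12                # seconds to the NAS, as quoted in the post
    print(f"Download: {format_duration(download)}")
    print(f"Saved:    {format_duration(download - lan)}")
    print(f"Speedup:  {download / lan:.1f}x")
```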

Future Improvements

In the future I could improve my setup by getting SATA SSDs that get about 550 MBps read and write per drive. Instead of putting them in RAID 5 (SHR), I would put them in RAID 10. That way I can get:

- RAID 10 (4 TB usable) (4 Disks)

- 4x Read Speed = 2.2 GBps, minus 20% Speed Degradation = 1.76 GBps

- 2x Write Speed = 1.1 GBps, minus 20% Speed Degradation = 0.880 GBps
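The rule-of-thumb multipliers used throughout this post can be collected into one small estimator. This is a simplified sketch: the multipliers are the ones assumed in this post, not measured values, and real arrays (especially RAID 5 writes and RAID 1 reads) will vary with workload and controller. The 2 TB drive size is inferred from 4 TB usable across 4 disks in RAID 10.

```python
def raid_estimate(level: str, drives: int, drive_mbps: float, drive_tb: float,
                  degradation: float = 0.20):
    """Return (read_gbps, write_gbps, usable_tb) using rule-of-thumb multipliers."""
    # level -> (read multiplier, write multiplier, capacity multiplier)
    multipliers = {
        "RAID 0": (drives, drives, drives),
        "RAID 1": (drives, 1, 1),
        "RAID 5": (drives - 1, 1, drives - 1),
        "RAID 10": (drives, drives / 2, drives / 2),
    }
    read_x, write_x, capacity_x = multipliers[level]
    per_drive_gbps = drive_mbps / 1000 * (1 - degradation)
    return read_x * per_drive_gbps, write_x * per_drive_gbps, capacity_x * drive_tb


if __name__ == "__main__":
    configs = [
        ("RAID 5", 4, 215, 10),   # current WD Red Plus array
        ("RAID 10", 4, 550, 2),   # possible SATA SSD array (2 TB drives assumed)
    ]
    for level, drives, mbps, tb in configs:
        read, write, usable = raid_estimate(level, drives, mbps, tb)
        print(f"{level}: read {read:.3f} GBps, write {write:.3f} GBps, "
              f"{usable:g} TB usable")
```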

It's a slight performance increase, but for my use case it's not really needed, which is why I decided to just get some NVMe drives and create a storage pool for now.

Conclusion

Depending on your setup and use cases, you may or may not need these kinds of speeds. That’s why you have to assess each build on a case-by-case basis. In my case I would like to be able to smoothly run VMs off the NAS or transfer multiple large videos to and from my NAS. I also used this upgrade to learn more about RDMA and PCIe.

I also created this diagram to show all my options. The highlighted cards and arrows display the choices that I made after my research:

Resources

- Wikipedia RDMA: https://en.wikipedia.org/wiki/Remote_direct_memory_access

- 10 TB WD Red Plus Spec Sheet: https://documents.westerndigital.com/content/dam/doc-library/en_us/assets/public/western-digital/product/internal-drives/wd-red-plus-hdd/product-brief-western-digital-wd-red-plus-hdd.pdf

- 25Gb NIC: https://network.nvidia.com/sites/default/files/doc-2020/connectx-3-pro-ethernet-single-and-dual-qsfp+-port-adapter-card-user-manual.pdf